NVIDIA Next-Gen Hopper GH100 Data Center GPU Unveiled: 4nm, 18432 Cores, 700W Power Draw, 4000 TFLOPs of Mixed Precision Compute | Hardware Times

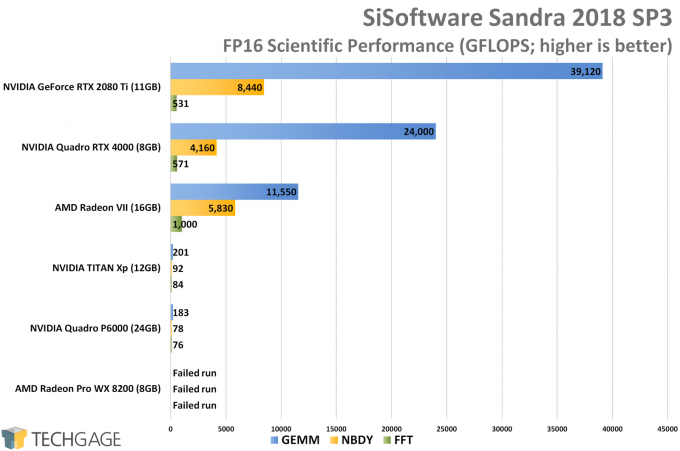

FP16 Throughput on GP104: Good for Compatibility (and Not Much Else) - The NVIDIA GeForce GTX 1080 & GTX 1070 Founders Editions Review: Kicking Off the FinFET Generation
