Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

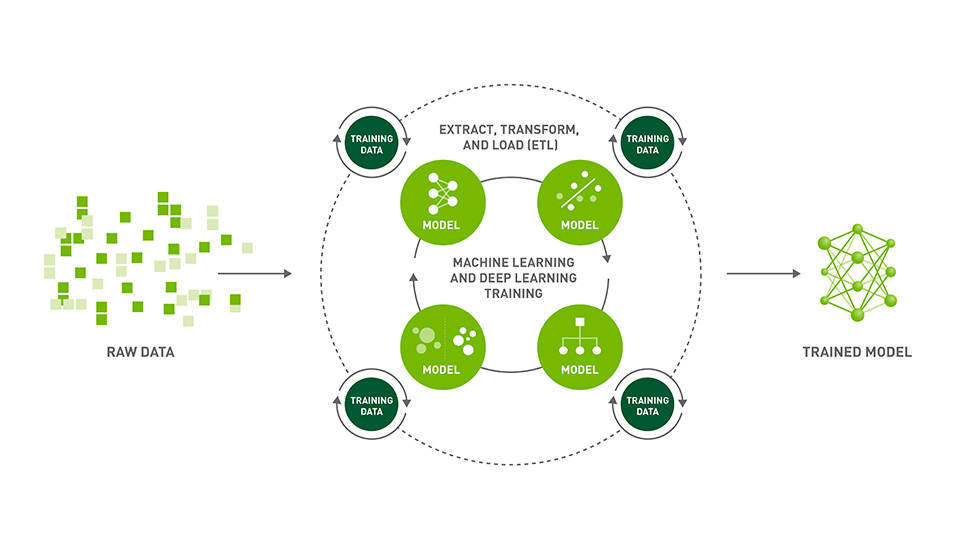

Accelerating Scikit-Image API with cuCIM: n-Dimensional Image Processing and I/O on GPUs | NVIDIA Technical Blog

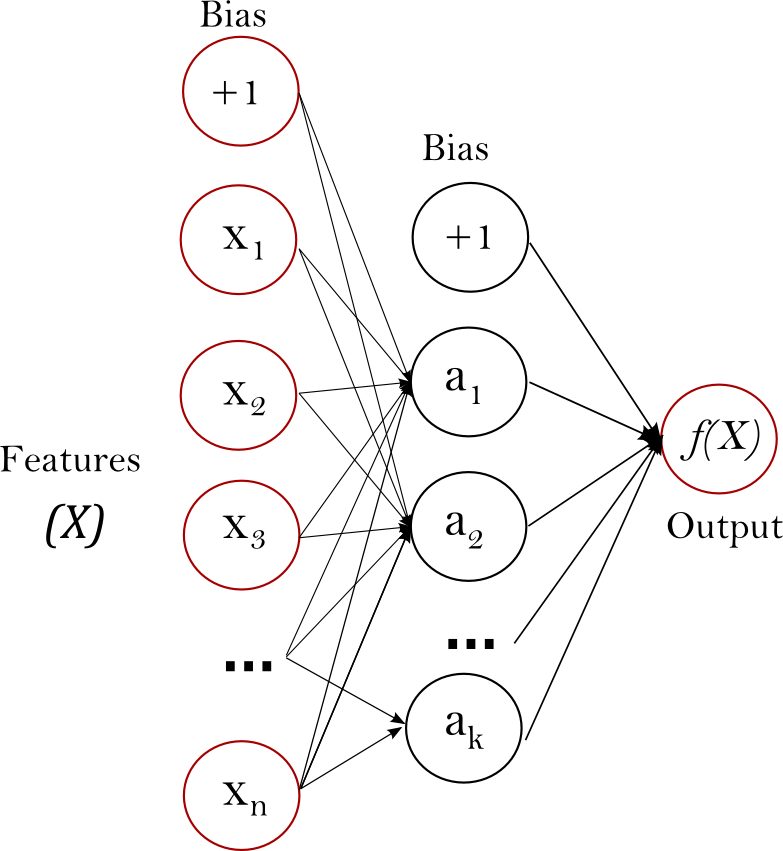

Best Python Libraries for Machine Learning and Deep Learning | by Claire D. Costa | Towards Data Science